I am going to set up a daily backup of a Postgres 9.3

Database on an Ubuntu Server 14.04 server with Postgres 9.3 Installed.

I am going to set up a daily backup of a Postgres 9.3

Database on an Ubuntu Server 14.04 server with Postgres 9.3 Installed.

I have a Postgres 9.3 Database installed on an Ubuntu 14.04

server set up per the instructions I posted at http://www.whiteboardcoder.com/2015/04/install-and-setup-postgres-93-db-on.html

Script to create two databases

If you don't yet have Postgres 9.3 installed on your Ubuntu

14.04 system. It's very easy to install

just run this command.

|

> sudo apt-get

install postgresql

|

This create a postgres user who has admin access to the

postgres server.

Switch over to the postgres user.

|

> sudo su

postgres

|

Then go to the postgres home directory

|

> cd

|

Open a script file.

|

> vi

script.sql

|

And place the following in it.

|

-- Add Users

CREATE USER app_ro WITH PASSWORD 'myPassword';

CREATE USER app_rw WITH PASSWORD 'myPassword';

-- Create First DB

CREATE DATABASE myapp;

-- login to the new DB

\c

myapp

--

Revoke all Privileges

REVOKE

ALL ON DATABASE myapp FROM PUBLIC;

REVOKE

ALL ON SCHEMA public FROM PUBLIC;

-- Set up privileges for app_ro

GRANT

CONNECT ON DATABASE myapp to app_ro;

GRANT

SELECT ON ALL TABLES IN SCHEMA public TO app_ro;

GRANT

SELECT ON ALL SEQUENCES IN SCHEMA public TO app_ro;

GRANT

USAGE ON SCHEMA public to app_ro;

-- Set up privileges for app_rw

GRANT CONNECT ON DATABASE myapp to app_rw;

GRANT

SELECT, UPDATE, INSERT, DELETE ON ALL TABLES IN SCHEMA public TO app_rw;

GRANT

SELECT ON ALL SEQUENCES IN SCHEMA public TO app_rw;

GRANT

USAGE ON SCHEMA public to app_rw;

GRANT

SELECT ON ALL SEQUENCES IN SCHEMA public TO app_ro;

GRANT

USAGE ON SCHEMA public to app_ro;

-- Set up privileges for app_ro (for new tables)

ALTER

DEFAULT PRIVILEGES IN SCHEMA public

GRANT SELECT ON TABLES TO app_ro;

ALTER

DEFAULT PRIVILEGES IN SCHEMA public

GRANT SELECT ON SEQUENCES TO app_ro;

-- Set up privileges for app_rw (for new tables)

ALTER

DEFAULT PRIVILEGES IN SCHEMA public

GRANT SELECT, UPDATE, INSERT, DELETE ON

TABLES TO app_rw;

ALTER

DEFAULT PRIVILEGES IN SCHEMA public

GRANT SELECT, UPDATE ON SEQUENCES TO

app_rw;

--Create

tables

CREATE

TABLE test (id serial, name varchar(255));

-- Create Second DB

CREATE DATABASE myapp2;

-- login to the new DB

\c

myapp2

--

Revoke all Privileges

REVOKE

ALL ON DATABASE myapp2 FROM PUBLIC;

REVOKE

ALL ON SCHEMA public FROM PUBLIC;

-- Set up privileges for app_ro

GRANT

CONNECT ON DATABASE myapp2 to app_ro;

GRANT

SELECT ON ALL TABLES IN SCHEMA public TO app_ro;

GRANT

SELECT ON ALL SEQUENCES IN SCHEMA public TO app_ro;

GRANT

USAGE ON SCHEMA public to app_ro;

-- Set up privileges for app_rw

GRANT CONNECT ON DATABASE myapp2 to app_rw;

GRANT

SELECT, UPDATE, INSERT, DELETE ON ALL TABLES IN SCHEMA public TO app_rw;

GRANT

SELECT ON ALL SEQUENCES IN SCHEMA public TO app_rw;

GRANT

USAGE ON SCHEMA public to app_rw;

GRANT

SELECT ON ALL SEQUENCES IN SCHEMA public TO app_ro;

GRANT

USAGE ON SCHEMA public to app_ro;

-- Set up privileges for app_ro (for new tables)

ALTER

DEFAULT PRIVILEGES IN SCHEMA public

GRANT SELECT ON TABLES TO app_ro;

ALTER

DEFAULT PRIVILEGES IN SCHEMA public

GRANT SELECT ON SEQUENCES TO app_ro;

-- Set up privileges for app_rw (for new tables)

ALTER

DEFAULT PRIVILEGES IN SCHEMA public

GRANT SELECT, UPDATE, INSERT, DELETE ON

TABLES TO app_rw;

ALTER

DEFAULT PRIVILEGES IN SCHEMA public

GRANT SELECT, UPDATE ON SEQUENCES TO

app_rw;

--Create

tables

CREATE

TABLE test_2 (id serial, name varchar(255));

|

This script should create two databases.

- myapp

- myapp2

Two users

- app_rw (That can read and write to either database)

- app_ro (That can only read from either database)

myapp has a table named test

myapp2 has a table named test_2

Save it and run the script with this command

|

> psql -U

postgres -d postgres -a -f script.sql

|

Exit out of the postgres user and run a test to make sure

you can connect to the database.

Run the following command to attach to myapp

|

> psql -h

localhost -U app_rw myapp

|

It will ask for a password.

If you used the script I have above the password is myPassword

Test if it has the table, by running this command in postgres

|

> \d test

|

Quit out of postgres

|

> \q

|

Now try the same thing for the second database.

|

> psql -h

localhost -U app_rw myapp2

|

Test if it has the table, by running this command in

postgres

|

> \d test_2

|

OK it looks good !

Script to load some information

Currently the database I have is empty. I want to load some information into it

before I back it up. With this in mind I

made a script to help me throw some dummy data in it quickly.

This is going to be a python script that does require one

tool that needs to be installed python-psycopg2.

Run the following command to install it.

|

> sudo apt-get

install python-psycopg2

|

Now for the script

|

> vi

insertDB.py

|

Here is my script

|

#!/usr/bin/python

#

#

# Simple Script to insert data

# Into Postgres

import psycopg2

import sys

import time

import random

import string

con = None

try:

# First get the current maximum

con = psycopg2.connect(database='myapp',

user='app_rw', password='myPassword', host='localhost')

cur = con.cursor()

for num in range (1, 10000):

#Adding this random string of length

200 to make the Database bigger faster

#Also I just thought it was cool to

make a random string

randomStr = ''.join(random.choice(string.lowercase)

for x in range(200))

randomStr =

time.strftime("%Y-%m-%d %H:%M:%S") + " " + randomStr

cur.execute("INSERT INTO test (name) VALUES

('" + randomStr + "')")

con.commit()

#If you want feedback uncomment this

#print str(num) + ' inserting ' +

randomStr

#if you are doing some testing

uncomment this so it pauses 1 second

#between inserts

#time.sleep(1)

except

psycopg2.DatabaseError, e:

print 'Error %s' % e

sys.exit(1)

finally:

if con:

con.close()

|

Tweak it to work with your tests. As is it inserts 100,000 records into a

single table. Each string it inserts

starts with a timestamp and ends with a random string.

For Example

2015-07-15 15:14:39 urqduifrvhtanhr

Make it executable.

|

> sudo chmod

u+x insertDB.py

|

And run it.

|

>

./insertDB.py

|

I am running it on a very small VM and it took ~ 2.5 minutes

to insert 100,000 records which added ~ 8 MiBs to the size of my Database

To check your database size you can run a command line this,

after you log back into the database from postgres

|

> SELECT pg_size_pretty(pg_database_size('myapp'));

|

It looks like loading 10K lines this way gives me a Database

of 9KiB in size.

I want ~ 500MiB of

data as a test which comes out to about 600,000 lines

I am going to update my code to insert 750,000 lines

|

for num in range (1, 750000):

#Adding this random string of length

200 to make the Database bigger faster

|

And run it again

|

>

./insertDB.py

|

Test the size again.

|

> SELECT pg_size_pretty(pg_database_size('myapp'));

|

OK that got me 200MiB.

I'll just run it twice more…

And I have 578MiB J

Load data in second database

I am going to tweak the script a little to put data in the

second database "myapp2"

|

> vi

insertDB.py

|

Here is my script

|

#!/usr/bin/python

#

#

# Simple Script to insert data

# Into Postgres

import psycopg2

import sys

import time

import random

import string

con = None

try:

# First get the current maximum

con = psycopg2.connect(database='myapp2', user='app_rw',

password='myPassword', host='localhost')

cur = con.cursor()

for num in range (1, 750000):

#Adding this random string of length

200 to make the Database bigger faster

#Also I just thought it was cool to

make a random string

randomStr =

''.join(random.choice(string.lowercase) for x in range(200))

randomStr =

time.strftime("%Y-%m-%d %H:%M:%S") + " " + randomStr

cur.execute("INSERT INTO test_2 (name) VALUES

('" + randomStr + "')")

con.commit()

#If you want feedback uncomment this

#print str(num) + ' inserting ' +

randomStr

#if you are doing some testing

uncomment this so it pauses 1 second

#between inserts

#time.sleep(1)

except

psycopg2.DatabaseError, e:

print 'Error %s' % e

sys.exit(1)

finally:

if con:

con.close()

|

And run it

|

>

./insertDB.py

|

To check the size run this command from postgres.

|

> SELECT pg_size_pretty(pg_database_size('myapp2'));

|

Back it up and compress it

With Postgres you have two tools you can use to backup your

database. You can use pg_dumpall to

backup the entire Database server or pgdump to just backup a specific Database.

For more information on this check out their documentation

pages

Just as a quick test I

am going to run pg_dumpall and pg_dump on the command line then make sure its

working before I make a simple bash script.

pg_dumpall

Out of the box the only

user who has permission to dump the database is the postgres user so switch

over to that user first!

|

> sudo su

postgres

|

Now dump the database

server

|

> pg_dumpall >

database.dumpall

|

Here is another way to dump it with bzip compression (Compresses as it dumps it)

|

> pg_dumpall |

bzip2 -vf > database.dumpall.bz2

|

That gives me two files one compressed and one not.

In my test case

- uncompressed = 661 MiB

- compressed = 358 MiB (I am sure lots of random data does not compress well J )

pg_dump

Out of the box the only

user who has permission to dump the database is the postgres user so switch

over to that user first!

|

> sudo su

postgres

|

Now dump the database,

but only a named database. In my case I

have a database called myapp.

Now dump the database

(change the database name to your own)

|

> pg_dump myapp > database_1.dump

|

Here is another way to dump it with bzip compression (Compresses as it dumps it)

|

> pg_dump myapp | bzip2 -vf >

database_1.dump.bz2

|

That gives me two files one compressed and one not.

In my test case

- unompressed = 497 MiB

- compressed = 270 MiB

Do the same thing for the other database myapp2

Now dump the database

(change the database name to your own)

|

> pg_dump myapp2 > database_2.dump

|

Here is another way to dump it with bzip compression (Compresses as it dumps it)

|

> pg_dump myapp2 | bzip2 -vf >

database_2.dump.bz2

|

That gives me two files one compressed and one not.

In my test case

- uncompreseed = 164 MiB

- compressed = 89 MiB

Reversing the process

At this point I have four

files

Two are dumpall and two just

a single database dump.

I have another machine with a

fresh install of postgres 9.3 I am going to transfer these files to and attempt

to load them in.

Upload the dumpall

Switch to the postgres user

|

> sudo su

postgres

|

I have a new fresh database I

am going to restore this saved database to.

The pg_dumpall file should restore both databases myapp and myapp2

|

> psql -f /home/patman/database.dumpall

postgres

|

Check it out, log in as the app_ro user

|

> psql -h

localhost -U app_ro myapp

|

Run a few commands to test the upload.

|

> SELECT

pg_size_pretty(pg_database_size('myapp'));

> SELECT

pg_size_pretty(pg_database_size('myapp2'));

|

|

> select * from

test limit 3;

|

Looks good to me.

Re-install the Database

Since I want to test my other scripts is probably best to

just remove and re- install postgres.

I found this post http://stackoverflow.com/questions/2748607/how-to-thoroughly-purge-and-reinstall-postgresql-on-ubuntu

[3] where John Mee and Craig Ringer @craig2ndq

lists an answer on how to do this.

To wipe out the database and re-install.

|

> sudo apt-get

--purge remove postgresql\*

|

Then remove the database files and postgres user.

(I did not remove /etc/postgresql/or /etc/postgresql-common/

as the purge removed them for me… but run these just in case)

|

> sudo rm -r /etc/postgresql/

> sudo rm -r /etc/postgresql-common/

> sudo rm -r

/var/lib/postgresql/

> sudo userdel

-r postgres

> sudo groupdel

postgres

|

Re-install postgres

|

> sudo apt-get

install postgresql

|

Upload the bzip dumpall

Switch to the postgres user

|

> sudo su

postgres

|

I have a new fresh database I

am going to restore this saved database to.

The pg_dumpall file should restore both databases myapp and myapp2. But this file is bzip'd so I need to unzip it

first

|

> bzcat /home/patman/database.dumpall.bz2

| psql postgres

|

Check it out, log in as the app_ro user

|

> psql -h

localhost -U app_ro myapp

|

Run a few commands to test the upload.

|

> SELECT

pg_size_pretty(pg_database_size('myapp'));

> SELECT pg_size_pretty(pg_database_size('myapp2'));

|

Looks good!

Re-install the Database (again)

Since I want to test my other scripts is probably best to

just remove and re- install postgres.

|

> sudo apt-get

--purge remove postgresql\*

|

Then remove the database files and postgres user.

(I did not remove /etc/postgresql/or /etc/postgresql-common/

as the purge removed them for me… but run these just in case)

|

> sudo rm -r /etc/postgresql/

> sudo rm -r /etc/postgresql-common/

> sudo rm -r

/var/lib/postgresql/

> sudo userdel

-r postgres

> sudo groupdel

postgres

|

Re-install postgres

|

> sudo apt-get

install postgresql

|

Upload the table dump

Switch to the postgres user

|

> sudo su

postgres

|

I have a new fresh database I

am going to restore this saved database to.

This time I am going to install the dump files which each contain one

table's data

If you try to run this

command to restore the first database

|

> psql myapp

< /home/patman/database_1.dump

|

You get an error. The database does not exist!

The pg_dump only backs up the

database itself it will not re-create it.

A database needs to exists. Also

it will not create the users for the database… it's basically just the data.

So what do I do?

If I was only concerned about

looking at the data as the postgres user I would just simply create and empty

database and load this data to it.

Run a command like this from

the command line (as a postgres user)

|

> psql -d

postgres -c "CREATE DATABASE myapp"

|

This just creates an empty

database called myapp.

Now retry to load this

database.

|

> psql myapp

< /home/patman/database_1.dump

|

Ahh look some errors because

my roles app_rw and app_ro don't exist!

Check it out, log in as the postgres user

|

> psql -U postgres myapp

|

Run a few commands to test the upload.

|

> SELECT

pg_size_pretty(pg_database_size('myapp'));

> SELECT

pg_size_pretty(pg_database_size('myapp2'));

|

The error on checking the second database is right, since I have

not uploaded it yet.

Upload the second dump file

Run a command like this from

the command line (as a postgres user)

|

> psql -d

postgres -c "CREATE DATABASE myapp2"

|

This just creates an empty

database called myapp.

Now retry to load this database.

|

> psql myapp2 < /home/patman/database_2.dump

|

Check it out, log in as the postgres user

|

> psql -U postgres myapp2

|

Run a few commands to test the upload.

|

> SELECT

pg_size_pretty(pg_database_size('myapp'));

> SELECT

pg_size_pretty(pg_database_size('myapp2'));

|

Looks good.

Fixing the roles

If you are doing a simple dump how do you fix the

roles? You could just remake them by

hand… which seems a pain or you could use pgdumpall --roles-only ! to get the

job done.

From your original database run this command (first switch

to postgres user)

|

> sudo su

postgres

|

Now dump the database

server (this will dump all roles!)

|

> pg_dumpall

--roles-only > database.roles

|

Move the file to the other server and run this to fix your

roles.

|

> psql -f

/home/patman/database.roles

|

One error the postgres user already exists..

Now I can login as one of my users…

|

> psql -h

localhost -U app_ro myapp

|

But I can't see my tables.

The user permissions were in the dump file and since the users did not

exist their permissions where not set.

To fix that… Just drop the databases and reload them like

this.

|

> psql -d

postgres -c "DROP DATABASE myapp"

> psql -d

postgres -c "DROP DATABASE myapp2"

> psql -d

postgres -c "CREATE DATABASE myapp"

> psql -d

postgres -c "CREATE DATABASE myapp2"

> psql myapp < /home/patman/database_1.dump

> psql myapp2 < /home/patman/database_2.dump

|

Now I can login as one of my users…

|

> psql -h

localhost -U app_ro myapp

|

And running this works now

|

> select *

from test limit 3;

|

Re-install the Database (again)

Since I want to test my other scripts is probably best to

just remove and re- install postgres.

|

> sudo apt-get

--purge remove postgresql\*

|

Then remove the database files and postgres user.

(I did not remove /etc/postgresql/or /etc/postgresql-common/

as the purge removed them for me… but run these just in case)

|

> sudo rm -r /etc/postgresql/

> sudo rm -r /etc/postgresql-common/

> sudo rm -r

/var/lib/postgresql/

> sudo userdel

-r postgres

> sudo groupdel

postgres

|

Re-install postgres

|

> sudo apt-get

install postgresql

|

Upload the bzip dump files

This time I will fix my roles off the bat

Switch to the Postgres user

|

> sudo su

postgres

|

Create the two empty databases.

|

> psql -d

postgres -c "CREATE DATABASE myapp"

> psql -d

postgres -c "CREATE DATABASE myapp2"

|

Load your user and roles

|

> psql -f

/home/patman/database.roles

|

Then to upload the two bzip dump files run the following.

|

> bzcat

/home/patman/database_1.dump.bz2 | psql myapp

> bzcat

/home/patman/database_2.dump.bz2

| psql myapp2

|

Check it out, log in as the postgres user

|

> psql -U postgres myapp

|

Run a few commands to test the upload.

|

> SELECT

pg_size_pretty(pg_database_size('myapp'));

> SELECT

pg_size_pretty(pg_database_size('myapp2'));

|

Backup Script

OK, now that the testing is done lets set up a simple backup

script.

I want my script to

·

Backup the whole database using pg_dumpall

·

Use bzip to compress it on the fly

·

Prepend a date to the file

·

Send a message to me in slack when it is

complete

·

Have crontab run it nightly

If you want a good script check out https://wiki.postgresql.org/wiki/Automated_Backup_on_Linux

[4]. I am going to be using a few ideas

from there.

Let me make a backups folder to place my nightly backups in. And make it readable by postgres

|

> sudo mkdir

-p /data/db-nightly-backups

> sudo chown

postgres:postgres /data/db-nightly-backups/

|

Create a scipt

|

> sudo vi

/usr/bin/backup-db

|

And place the following in it

|

#!/bin/bash

#

# Script to backup

local

# Postgres database

# Run via cron job

#

###########################

###########################

#

# Config section

#

###########################

BACKUP_USER='postgres'

BACKUP_DIR="/data/db-nightly-backups"

BZIP=yes

FILE_NAME="myapp.database"

#########################################

#

# Pre Backup Checks

#

#########################################

# Make sure we're

running as the required backup user

if [

"$BACKUP_USER" != "" -a "$(id -un)" !=

"$BACKUP_USER" ]; then

echo "This script must be run as

$BACKUP_USER not "$(id -un)" Exiting." 1>&2

exit 1;

fi;

# Make sure the

$BACKUP_DIR exists

if [ ! -d

"$BACKUP_DIR" ]; then

echo "Directory $BACKUP_DIR does not

exist. Exiting." 1>&2

exit 1;

fi

# Make sure they

can write to $BACKUP_DIR

if [ ! -w

$BACKUP_DIR ] ; then

echo "Directory $BACKUP_DIR cannot be

written to by $BACKUP_USER Exiting." 1>&2

exit 1

fi

#########################################

#

#Timestamp That

pre-pends the backup name

#Timestamp to track

how long backup takes

#

#########################################

DATE_STAMP=`date

+"%Y_%m_%d--%H_%M"`

TIMER_START=`date

+%s`

#########################################

#

# Backup the

Database

#

#########################################

FULL_FILE_NAME=$BACKUP_DIR/$DATE_STAMP"_"$FILE_NAME

if [ $BZIP =

"yes" ]

then

FULL_FILE_NAME=$FULL_FILE_NAME.bz2

echo "Backing up $FULL_FILE_NAME"

if ! pg_dumpall | bzip2 -vf >

$FULL_FILE_NAME; then

echo "pg_dumpall failed.

$FULL_FILE_NAME. Exiting." 1>&2

exit 1

fi

else

echo "Backing up $FULL_FILE_NAME"

if ! pg_dumpall > $FULL_FILE_NAME; then

echo "pg_dumpall failed.

$FULL_FILE_NAME. Exiting." 1>&2

exit 1

fi

fi

#########################################

#

# Confirm

#

#########################################

# If using bzip2

confirm the file is not corrupt

if [ $BZIP =

"yes" ]

then

echo "Confirming BZIP is valid"

if ! bzip2 -tv $FULL_FILE_NAME; then

echo "BZIP backup is corrupt.

$FULL_FILE_NAME. Exiting." 1>&2

exit 1

fi

fi

#########################################

#

# Backup Complete

now notify!

#

#########################################

TIMER_END=`date

+%s`

TOTAL_TIME=$(($TIMER_END

- $TIMER_START))

STR_TIME="$(($TOTAL_TIME

/ 60)) min $(($TOTAL_TIME % 60)) sec"

#Account for

backups taking hours

if (( $TOTAL_TIME

>= 3600 ))

then

STR_TIME="$(($TOTAL_TIME / 3600))

hours $(( min=$TOTAL_TIME / 60, min % 60)) min $(($TOTAL_TIME % 60))

sec"

fi

echo ""

echo

"================================"

echo ""

echo " DATABASE Backup Complete"

echo " Database save at "

echo " $FULL_FILE_NAME"

echo " BACKUP took $STR_TIME"

echo ""

echo

"================================"

exit 0

|

I made a gist for this at

Make it executable

|

> sudo chmod a+x

/usr/bin/backup-db

|

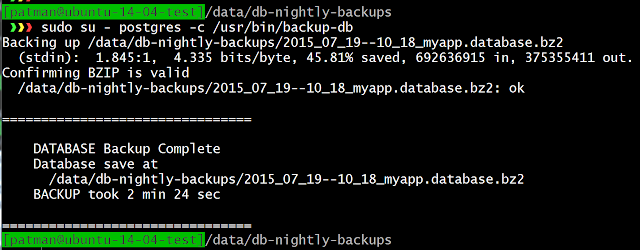

Run it as the postgres user

|

> sudo su - postgres

-c /usr/bin/backup-db

|

Adding Slack goodness

The simple backup script is working, but since I plan to add

it to the /etc/crontab jobs it's going to kinda work silently. I want to fix that by having the script

output to a Slack room I have.

If you don't know anything about slack our how to set up

webhooks in slack to post to rooms check out this post I made on the subject.

Here is what I came up with…

|

#!/bin/bash

#

# Script to backup

local

# Postgres database

# Run via cron job

#

#########################################

#########################################

#

# Config section

#

#########################################

BACKUP_USER='postgres'

BACKUP_DIR="/data/db-nightly-backups"

BZIP=yes

FILE_NAME="myapp.database"

#########################################

#

# Slack Function

#

#########################################

SLACK_URL="PUT

YOUR SLACK URL HERE!!!!"

function

slack_post_good() {

curl -H

"Content-type:application/json" \

-X POST -d \

'{

"channel":"#dev_ops",

"username" :

"Postgres",

"icon_emoji" :

":postgres:",

"attachments" : [

{

"fallback": "DB

backup succeeded",

"color" :

"good",

"fields" : [

{

"title" : "DB

backup succeded",

"value" :

".\nDB back up succeeded\nmsg: '"$1"'",

"short" :

"true"

},

{

"title" :

"'"$2"'",

"value" :

".\n'"$3"'",

"short" :

"true"

}

]

}

]

}

' $SLACK_URL

}

function

slack_post_bad() {

curl -H

"Content-type:application/json" \

-X POST -d \

'{

"channel":"#dev_ops",

"username" :

"Postgre",

"icon_emoji" :

":postgres:",

"attachments" : [

{

"fallback": "Alert!:

DB Backup Failed",

"color" :

"danger",

"fields" : [

{

"title" :

"Alert!: DB Backup Failed",

"value" :

".\nDB back up failed\nmsg: '"$1"'",

"short" :

"true"

}

]

}

]

}

' $SLACK_URL

}

#########################################

#

# Pre Backup Checks

#

#########################################

# Make sure we're

running as the required backup user

if [

"$BACKUP_USER" != "" -a "$(id -un)" !=

"$BACKUP_USER" ]; then

echo "This script must be run as

$BACKUP_USER not "$(id -un)" Exiting." 1>&2

slack_post_bad "This script must be

run as $BACKUP_USER not "$(id -un)""

exit 1;

fi;

# Make sure the

$BACKUP_DIR exists

if [ ! -d

"$BACKUP_DIR" ]; then

echo "Directory $BACKUP_DIR does not

exist. Exiting." 1>&2

slack_post_bad "Directory $BACKUP_DIR

does not exist."

exit 1;

fi

# Make sure they

can write to $BACKUP_DIR

if [ ! -w

$BACKUP_DIR ] ; then

echo "Directory $BACKUP_DIR cannot be

written to by $BACKUP_USER Exiting." 1>&2

slack_post_bad "Directory $BACKUP_DIR

cannot be written to by $BACKUP_USER"

exit 1

fi

#########################################

#

#Timestamp That

pre-pends the backup name

#Timestamp to track

how long backup takes

#

#########################################

DATE_STAMP=`date

+"%Y_%m_%d--%H_%M"`

TIMER_START=`date

+%s`

#########################################

#

# Backup the

Database

#

#########################################

FULL_FILE_NAME=$BACKUP_DIR/$DATE_STAMP"_"$FILE_NAME

if [ $BZIP =

"yes" ]

then

FULL_FILE_NAME=$FULL_FILE_NAME.bz2

echo "Backing up $FULL_FILE_NAME"

if ! pg_dumpall | bzip2 -vf >

$FULL_FILE_NAME; then

echo "pg_dumpall failed.

$FULL_FILE_NAME. Exiting." 1>&2

slack_post_bad "pg_dumpall failed.

$FULL_FILE_NAME."

exit 1

fi

else

echo "Backing up $FULL_FILE_NAME"

if ! pg_dumpall > $FULL_FILE_NAME; then

echo "pg_dumpall failed.

$FULL_FILE_NAME. Exiting." 1>&2

slack_post_bad "pg_dumpall failed.

$FULL_FILE_NAME."

exit 1

fi

fi

#########################################

#

# Confirm

#

#########################################

# If using bzip2

confirm the file is not corrupt

if [ $BZIP =

"yes" ]

then

echo "Confirming BZIP is valid"

if ! bzip2 -tv $FULL_FILE_NAME; then

echo "BZIP backup is corrupt.

$FULL_FILE_NAME Exiting." 1>&2

slack_post_bad "BZIP backup is

corrupt.\nfile: $FULL_FILE_NAME"

exit 1

fi

fi

#########################################

#

# Backup Complete

now notify!

#

#########################################

TIMER_END=`date

+%s`

TOTAL_TIME=$(($TIMER_END

- $TIMER_START))

STR_TIME="$(($TOTAL_TIME

/ 60)) min $(($TOTAL_TIME % 60)) sec"

#Account for

backups taking hours

if (( $TOTAL_TIME

>= 3600 ))

then

STR_TIME="$(($TOTAL_TIME / 3600))

hours $(( min=$TOTAL_TIME / 60, min % 60)) min $(($TOTAL_TIME % 60))

sec"

fi

#Get extra

information about number of database backed up etc.

DB_COUNT=`ls

$BACKUP_DIR | grep ".database." | wc -l`

DB_DIR_INFO=`ls -l

--block-size=M $BACKUP_DIR | grep ".database." | awk '{print $9

" SIZE: " $5 " "}' | sed

's/.$/\\\n/g' | tr -d "\n"`

slack_post_good

"Database backup Complete\nDB_LOC:

$FULL_FILE_NAME\nTIME:

$STR_TIME" \

"$DB_COUNT Databases

in $BACKUP_DIR" \

"$DB_DIR_INFO"

echo ""

echo

"================================"

echo ""

echo " DATABASE Backup Complete"

echo " Database save at "

echo " $FULL_FILE_NAME"

echo " BACKUP took $STR_TIME"

echo ""

echo

"================================"

exit 0

|

Here is the link to the gist https://gist.github.com/patmandenver/6e8fa593ba4e020d1fea

And there is the output all formatted nicely!

Add it to /etc/crontab

Now add the backup

to the /etc/crontab to run it every day.

|

> sudo vi

/etc/crontab

|

And add the

following to the end.

|

####################################

#

# Backup DB cron jobs

# m h dom mon dow user

command

22 2 *

* * postgres

/usr/bin/backup-db

|

This will run the backup every day at 2:22 AM as the user

postgres.

Save it and let it run J

Purge Script

Now you need a purge script.

If you keep backing up your database every night without removing some

files you are going to eventually run out of disk space.

With this in mind I am going to create a simple script to

remove database backup files older than X days. This script will report back to Slack and

will be run nightly as a cron job.

Create a scipt

|

> sudo vi

/usr/bin/purge-old-db

|

And place the following in it

|

#!/bin/bash

#

# Script to purge

old database backups

# Run via cron job

#

#########################################

#########################################

#

# Config section

#

#########################################

BACKUP_DIR="/data/db-nightly-backups"

DAYS_TO_KEEP=15

#########################################

#

# Delete Old

Datbases older than $DAYS_TO_KEEP

#

#########################################

# for testing you

can switch mtime for days to mmin for minutes

NUM_FILES_REMOVED=`find

$BACKUP_DIR -maxdepth 1 -mtime +$DAYS_TO_KEEP

-name "*.database.*" | wc -l`

# for testing you

can switch mtime for days to mmin for minutes

if ! find

$BACKUP_DIR -maxdepth 1 -mtime +$DAYS_TO_KEEP

-name "*.database.*" | xargs -I{} rm -rf {};

then

echo "DB purge failed. Exiting."

1>&2

exit 3

fi

#########################################

#

# purge Complete

now notify!

#

#########################################

echo ""

echo "================================"

echo ""

echo " DATABASE purge Complete"

echo ""

echo " Databases Removed : $NUM_FILES_REMOVED"

echo ""

echo " Only keeping $DAYS_TO_KEEP DAYS"

echo

"================================"

exit 0

|

I put this up as a gist at

Make it executable

|

> sudo chmod a+x

/usr/bin/purge-old-db

|

Run it ( no need to run it as postgres)

|

> sudo

/usr/bin/purge-old-db

|

You'll probably get this since you don't have any older

files..

So let's make a few!

Use touch to make a few dated files

|

> for i in

{1..9}; do sudo touch -d "16 days ago" My_test.database.test$i;

done

|

Now run it again

|

> sudo

/usr/bin/purge-old-db

|

Adding Slack goodness

The simple backup script is working, but since I plan to add

it to the /etc/crontab jobs it's going to kinda work silently. I want to fix that by having the script

output to a Slack room I have.

If you don't know anything about slack our how to set up

webhooks in slack to post to rooms check out this post I made on the subject.

Here is what I came up with.

|

#!/bin/bash

#

# Script to purge

old database backups

# Run via cron job

#

#########################################

#########################################

#

# Config section

#

#########################################

BACKUP_DIR="/data/db-nightly-backups"

DAYS_TO_KEEP=15

#########################################

#

# Slack Function

#

#########################################

SLACK_URL=`cat /etc/init.d/slack_url`

function slack_post_good() {

curl -H

"Content-type:application/json" \

-X POST -d \

'{

"channel":"#dev_ops",

"username" : "ESXi",

"icon_emoji"

: ":esxi:",

"attachments" : [

{

"fallback": "DB Purge Succeeded",

"color" : "good",

"fields" : [

{

"title" : "DB Purge Succeded",

"value" : ".\nDB purge succeeded\nmsg:

'"$1"'",

"short" : "true"

},

{

"title" : "'"$2"'",

"value" : ".\n'"$3"'",

"short" : "true"

}

]

}

]

}

' $SLACK_URL

}

function slack_post_bad() {

curl -H

"Content-type:application/json" \

-X POST -d \

'{

"channel":"#dev_ops",

"username" : "ESXi",

"icon_emoji" : ":esxi:",

"attachments" : [

{

"fallback": "Alert!: DB Purge Failed",

"color" : "danger",

"fields" : [

{

"title" : "Alert!: DB Purge Failed",

"value" : ".\nDB Purge failed\nmsg:

'"$1"'",

"short" : "true"

}

]

}

]

}

' $SLACK_URL

}

#########################################

#

# Delete Old

Datbases older than $DAYS_TO_KEEP

#

#########################################

# for testing you

can switch mtime for days to mmin for minutes

NUM_FILES_REMOVED=`find

$BACKUP_DIR -maxdepth 1 -mtime +$DAYS_TO_KEEP

-name "*.database.*" | wc -l`

# for testing you

can switch mtime for days to mmin for minutes

if ! find

$BACKUP_DIR -maxdepth 1 -mtime +$DAYS_TO_KEEP

-name "*.database.*" | xargs -I{} rm -rf {};

then

echo "DB purge failed. Exiting."

1>&2

slack_post_bad "DB purge failed on

DIR\n$BACKUP_DIR"

exit 3

fi

#########################################

#

# purge Complete

now notify!

#

#########################################

DB_DIR_INFO=`df -h

$BACKUP_DIR | sed 's/.$/\\\n/g' | tr -d "\n"`

slack_post_good

"Database Purge Complete\nDAYS_KEPT:

$DAYS_TO_KEEP\nFILES_REMOVED:

$NUM_FILES_REMOVED" \

"BACKUP FOLDER

% Left" \

"$DB_DIR_INFO"

echo ""

echo

"================================"

echo ""

echo " DATABASE purge Complete"

echo ""

echo " Databases Removed : $NUM_FILES_REMOVED"

echo ""

echo " Only keeping $DAYS_TO_KEEP DAYS"

echo

"================================"

exit 0

|

Here is the gist

Add a few test files

|

> for i in

{1..9}; do sudo touch -d "16 days ago" My_test.database.test$i;

done

|

Run it!

|

> sudo su -

postgres -c /usr/bin/purge-old-db

|

Add it to /etc/crontab

Now add the backup

to the /etc/crontab to run it every day.

|

> sudo vi

/etc/crontab

|

And add the

following to the end.

|

####################################

#

# Backup DB cron jobs

# m h dom mon dow user

command

22 2 *

* * postgres

/usr/bin/backup-db

33 3 *

* * root /usr/bin/purge-old-db

|

This will run the backup every day at 3:33 AM as the user

postgres.

Save it and let it run J

Last test (for fun… and well for testing)

I could wait a few days to let these tests run their course

or I can speed it up a bit!

I am going to run a backup every 10 minutes and a purge

every 30 minutes (that wipes out the last 30 minutes).

First edit the /usr/bin/purge-old-db

|

> sudo vi /usr/bin/purge-old-db

|

Update these two lines, replacing mtime with mmin (for

minutes)

|

NUM_FILES_REMOVED=`find

$BACKUP_DIR -maxdepth 1 -mmin

+$DAYS_TO_KEEP -name

"*.database.*" | wc -l`

#

for testing you can switch mtime for days to mmin for minutes

if

! find $BACKUP_DIR -maxdepth 1 -mmin +$DAYS_TO_KEEP

-name "*.database.*" | xargs -I{} rm -rf {};

then

echo "DB purge failed. Exiting."

1>&2

slack_post_bad "DB purge failed on

DIR\n$BACKUP_DIR"

exit 3

fi

|

Then edit the cron job to run every 10 minutes for the

backup and every 30 for the purge.

|

> sudo vi

/etc/crontab

|

Here are the changes

|

*/10 *

* * *

postgres /usr/bin/backup-db

*/30 *

* * *

root /usr/bin/purge-old-db

|

Now sit back for an hour or so and watch it work!

And it's working!

References

[1] Pg_dump Posgres

help page

Visited 7/2015

[2] pg_dumpall

Postgres help page

Visited 7/2015

[3] How to thoroughly

purge and reinstall postgresql on ubuntu?

Visited 7/2015

[4] Automated Backup

on Linux

Visited 7/2015

No comments:

Post a Comment